Welcome back, savvy investors and AI enthusiasts! 🚀 Our journey into Temporal Fusion Transformers (TFTs) and their application in stock market prediction continues. In this installment, we’ll demystify the key components of TFTs and discuss how they can be implemented using popular machine learning libraries such as TensorFlow and PyTorch. Let’s dive in!

Unfolding the Secrets of Temporal Fusion Transformers

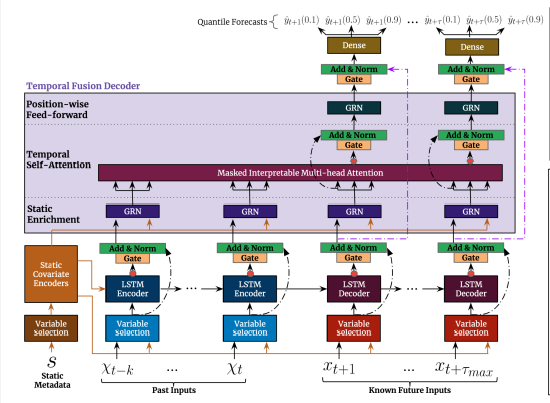

A TFT is composed of several critical components:

- Data Input Layer: This is the entry point of your time series data into the model. It accepts the raw input data and gets the process started.

- Input Encoding Layer: This layer transforms the raw data using time-based and variable-based encoding. Time-based encoding captures the temporal patterns, while variable-based encoding acknowledges the unique attributes of each variable.

- Variable Selection Layer: As the name suggests, this layer determines the most significant variables at each time step based on the model’s current state. For example, if the model’s state indicates that the trading volume will have a larger impact on future stock prices, the variable selection layer will give it higher priority.

- Temporal Self-Attention Mechanism: This mechanism allows the model to assign ‘weights’ to different time steps based on their importance for making predictions. For instance, the stock price a day ago might be deemed more important than the stock price a week ago when predicting tomorrow’s price.

- Gated Linear Unit (GLU) Layer: The GLU layer receives the output from the self-attention mechanism and processes it further. This processing ensures that the most relevant information is preserved and carried forward in the model.

- Skip Connection: This component takes the output from the GLU layer and combines it with the output from the input encoding layer. This ‘skipping’ action helps retain information from earlier layers and prevent the problem of vanishing gradients, which can occur in deep neural networks.

- Fully Connected Layer: The final stage of the TFT. This layer takes the combined output from the skip connection and generates the final output of the model, i.e., the prediction.
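To make the data flow through these components more concrete, here is a minimal NumPy sketch. It is not a reference TFT implementation: every size, weight matrix, and variable name is an illustrative assumption, and the weights are random and untrained. The causal mask in the attention step is also an assumption (the description above does not specify one), and the skip connection here adds back the representation that enters the attention step, which simplifies the description above.

```python
import numpy as np

rng = np.random.default_rng(0)

def softmax(x, axis=-1):
    # Subtract the max for numerical stability before exponentiating.
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

# Illustrative sizes (assumptions): time steps, input variables, hidden width.
T, V, D = 5, 3, 4

# Stand-in for the input encoding layer's output:
# one D-dimensional vector per variable per time step.
encoded = rng.normal(size=(T, V, D))

# Variable selection: softmax weights over the V variables at each time step.
w_select = rng.normal(size=(V * D, V))
var_weights = softmax(encoded.reshape(T, V * D) @ w_select)   # shape (T, V)
selected = (var_weights[:, :, None] * encoded).sum(axis=1)    # shape (T, D)

# Temporal self-attention: scaled dot-product attention over time steps.
w_q, w_k, w_v = (rng.normal(size=(D, D)) for _ in range(3))
q, k, v = selected @ w_q, selected @ w_k, selected @ w_v
scores = q @ k.T / np.sqrt(D)                                 # shape (T, T)
# Assumed causal mask: a time step may not attend to later time steps.
future = np.triu(np.ones((T, T), dtype=bool), k=1)
scores = np.where(future, -np.inf, scores)
attn_weights = softmax(scores)                                # each row sums to 1
attended = attn_weights @ v                                   # shape (T, D)

# Gated linear unit: a linear projection scaled by a sigmoid gate.
w_a, w_b = rng.normal(size=(D, D)), rng.normal(size=(D, D))
glu_out = (attended @ w_a) * sigmoid(attended @ w_b)

# Skip connection: add the pre-attention representation back in.
combined = selected + glu_out

# Fully connected layer: map the last time step to a single forecast value.
w_out = rng.normal(size=(D, 1))
prediction = combined[-1] @ w_out                             # shape (1,)
```

Each row of `var_weights` shows how much weight the three variables receive at one time step, and each row of `attn_weights` shows how much one time step draws on the current and earlier steps.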

In the forthcoming articles, we’ll dig deeper into each of these components, offering a comprehensive view of the inner workings of TFTs.

Implementing Temporal Fusion Transformers

To implement TFTs, one can leverage popular machine learning libraries like TensorFlow, developed by Google, and PyTorch, created by Facebook’s AI Research lab. The first step is data preparation, which involves arranging our data into a time series format that the TFT can use for training. This data could include historical stock prices, trading volumes, and other pertinent financial data.
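As a rough illustration of the data preparation step, the sketch below slices a price history into pairs of past windows and future targets using plain Python. The function name `make_windows`, its `lookback` and `horizon` parameters, and the prices are all hypothetical choices made for this example; a real pipeline would also carry trading volumes and other features alongside the prices.

```python
def make_windows(series, lookback, horizon):
    """Split a 1-D sequence into (past window, future targets) pairs."""
    if lookback <= 0 or horizon <= 0:
        raise ValueError("lookback and horizon must be positive")
    samples = []
    # Last index at which a full past window and a full target still fit.
    last_start = len(series) - lookback - horizon
    for start in range(last_start + 1):
        past = series[start:start + lookback]
        future = series[start + lookback:start + lookback + horizon]
        samples.append((past, future))
    return samples

# Made-up closing prices, for illustration only.
closes = [101.0, 102.5, 101.8, 103.2, 104.0, 103.5, 105.1]

# Use the previous 4 days to predict the next day.
windows = make_windows(closes, lookback=4, horizon=1)
for past, future in windows:
    print(past, "->", future)
```

Keeping the windows in chronological order matters here: when the data is later split for training and evaluation, the evaluation windows should come after the training windows in time so that the model is never scored on data from its own past.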

With the data prepared, the next step is to train the model on these historical sequences. Once our model is trained, we can employ it for making predictions: given a new sequence of recent data, the model outputs its prediction for future values.

The Future of AI in Finance

TFTs mark a significant advance in time series forecasting. They can help investors forecast future stock prices more accurately, which may provide an edge in the market. However, the potential of AI in finance goes beyond stock market prediction. It can be used to optimize trading strategies, detect fraudulent activities, automate financial planning, and much more.

We’ve now dissected the mechanics of Temporal Fusion Transformers and touched upon their implementation using Python and popular machine learning libraries. This should give you a robust foundation for understanding and using these potent models in your work.

In the next post, we’ll explore more sophisticated aspects of TFTs and examine real-world examples of their use in stock market prediction. So, keep learning, keep exploring, and stay tuned for the next part of our AI for Stock Market Prediction series! 🚀

See you in the next post!

Follow us here: @GentJayson